Part 1 of a 2-part series

Table of Contents


- Introduction

- The Problem Before Projects

- The Projects Solution

- Financial Benefits for OpenAI (Cost Savings via Disk Offloading)

- Workflow Optimization: Best Practices

- How to Use Projects Effectively

- Token Usage and Cost Estimates

- Best Practices for Leveraging OpenAI Tools

- Practical Example: AI-Powered Collaboration in Action

- Frequently Asked Questions (FAQ)

- Why It’s a Win-Win

- Conclusion

- Glossary

- References

- Related Sources

- Support My Work

Introduction

AI tools have empowered creators and professionals, but for many independent publishers, the challenges of managing complex workflows have been a barrier to entry. OpenAI’s Projects feature redefines this landscape, offering a breakthrough solution for long-form tasks. By enabling persistent context retention, it transforms workflows for everyone from novelists to developers—allowing creators to focus on their craft rather than repetitive groundwork. Learn more about this feature in OpenAI’s official documentation.

Have you ever struggled with AI tools losing context on long documents? Imagine never needing to re-explain your ideas or split your projects into smaller parts again. OpenAI’s Projects feature is a game-changer, revolutionizing workflows for users while simultaneously optimizing OpenAI’s infrastructure. This article explores how Projects solves major user challenges, the technical innovation behind it, and its financial and strategic impact. By combining workflow optimization with persistent storage, Projects delivers scalable, efficient solutions for professional and enterprise needs.

The Problem Before Projects

AI Challenges and Workflow Inefficiencies

What Are the Challenges of Managing Long-Form Workflows?

- Context Window Limitations (see Glossary):

- AI tools like the original GPT-4 model had a context window of approximately 8,000 tokens (8,192), equivalent to about 6,000 words.

- Tasks often needed to be split into smaller chunks, leading to workflow inefficiencies and loss of persistent context.

- User Frustrations:

- Writers constantly summarized earlier drafts to maintain coherence.

- Developers reloaded context for large codebases, leading to redundant work.

- Researchers struggled to manage fragmented insights across multiple sessions.

- Technical Constraints:

- Maintaining context in RAM required high memory usage, inflating costs and limiting scalability.

- Manual Effort and Time Costs:

- Without Projects, users spent significant time duplicating effort—re-uploading files, re-explaining their objectives, and reorganizing fragmented materials.

- These inefficiencies not only increased workload but also introduced higher risks of inconsistency and lost insights.
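The following minimal Python sketch shows what splitting a long document into window-sized pieces looks like in practice. It assumes the rough ratio quoted above of about 0.75 words per token. Real tokenizers, such as OpenAI's tiktoken library, count differently. The function names are illustrative only.

```python
import math

# Heuristic taken from the figures above: ~8,000 tokens ≈ 6,000 words,
# i.e. about 0.75 words per token. Real tokenizers will differ.
WORDS_PER_TOKEN = 0.75


def approx_tokens(text: str) -> int:
    """Roughly estimate the token count of `text` from its word count."""
    return math.ceil(len(text.split()) / WORDS_PER_TOKEN)


def split_into_chunks(text: str, max_tokens: int = 8000) -> list[str]:
    """Split `text` into consecutive chunks that each fit an approximate token budget."""
    max_words = max(1, int(max_tokens * WORDS_PER_TOKEN))
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


# Usage: a 15,000-word manuscript no longer fits in one ~8,000-token window.
manuscript = " ".join(f"word{i}" for i in range(15_000))
chunks = split_into_chunks(manuscript)
print(len(chunks), [approx_tokens(c) for c in chunks])  # 3 [8000, 8000, 4000]
```

Each chunk then has to go into a separate request. Anything the model should remember from earlier chunks has to be summarized and restated by hand, which is exactly the overhead described above.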

The Projects Solution

Enterprise-Grade AI Solutions for Workflow Optimization

How Does OpenAI’s Projects Feature Solve These Problems?

OpenAI’s Projects feature enables users to upload files and associate chats with specific tasks. Learn more about dynamic referencing in AI systems in this technical paper. By leveraging disk I/O and persistent storage, Projects ensures that historical context remains accessible across sessions. This innovation optimizes workflow efficiency while reducing infrastructure demands.

Dynamic referencing is a key component of this feature, allowing data to be fetched from disk storage rather than requiring it to remain in live memory (RAM). This process ensures that even extensive datasets or long documents can be quickly accessed without overburdening system resources, enabling seamless transitions between sessions. By utilizing disk I/O, Projects dynamically updates references as files change, maintaining a consistent and reliable workflow.
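The general pattern of keeping context on disk and pulling it into memory only on demand can be sketched in a few lines of Python. OpenAI has not published how Projects stores context internally, so this is not its implementation. The `DiskContextStore` class, the one-JSON-file-per-entry layout, and the two-entry in-memory cache are all hypothetical choices made for illustration.

```python
import json
import tempfile
from collections import OrderedDict
from pathlib import Path


class DiskContextStore:
    """Hypothetical store: all context lives on disk; only a few recent entries stay in RAM."""

    def __init__(self, root: Path, cache_size: int = 2):
        self.root = Path(root)
        self.root.mkdir(parents=True, exist_ok=True)
        self.cache_size = cache_size
        self.cache = OrderedDict()  # key -> context dict, most recently used last
        self.disk_reads = 0         # how often a lookup had to go to disk

    def _path(self, key: str) -> Path:
        return self.root / f"{key}.json"

    def put(self, key: str, context: dict) -> None:
        # Persist first so the context survives across sessions; overwriting updates it.
        self._path(key).write_text(json.dumps(context), encoding="utf-8")
        self._remember(key, context)

    def get(self, key: str) -> dict:
        if key in self.cache:
            self.cache.move_to_end(key)  # mark as recently used
            return self.cache[key]
        # Cache miss: fetch the context from disk instead of keeping it in RAM.
        self.disk_reads += 1
        context = json.loads(self._path(key).read_text(encoding="utf-8"))
        self._remember(key, context)
        return context

    def _remember(self, key: str, context: dict) -> None:
        self.cache[key] = context
        self.cache.move_to_end(key)
        while len(self.cache) > self.cache_size:
            self.cache.popitem(last=False)  # evict the least recently used entry from RAM


with tempfile.TemporaryDirectory() as tmp:
    store = DiskContextStore(Path(tmp), cache_size=2)
    store.put("novel-ch1", {"summary": "Chapter 1 draft"})
    store.put("novel-ch2", {"summary": "Chapter 2 draft"})
    store.put("novel-ch3", {"summary": "Chapter 3 draft"})  # evicts ch1 from RAM
    ch1 = store.get("novel-ch1")                             # read back from disk
    print(ch1["summary"], store.disk_reads)                  # Chapter 1 draft 1
    # A fresh store over the same directory stands in for a later session.
    later = DiskContextStore(Path(tmp))
    print(later.get("novel-ch2")["summary"])                 # Chapter 2 draft
```

The design reflects the usual trade-off. Disk holds everything cheaply and survives restarts. A small least-recently-used cache keeps frequently accessed context in RAM, so repeated lookups avoid disk reads.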

Benefits of OpenAI’s Projects Feature:

- Seamless Workflow Optimization:

- Long documents and projects can now be handled without context loss.

- Improved Consistency:

- Projects maintain tone, style, and accuracy across extended tasks.

- Professional Scalability:

- Writers, developers, and researchers can now manage long-form workflows effortlessly.

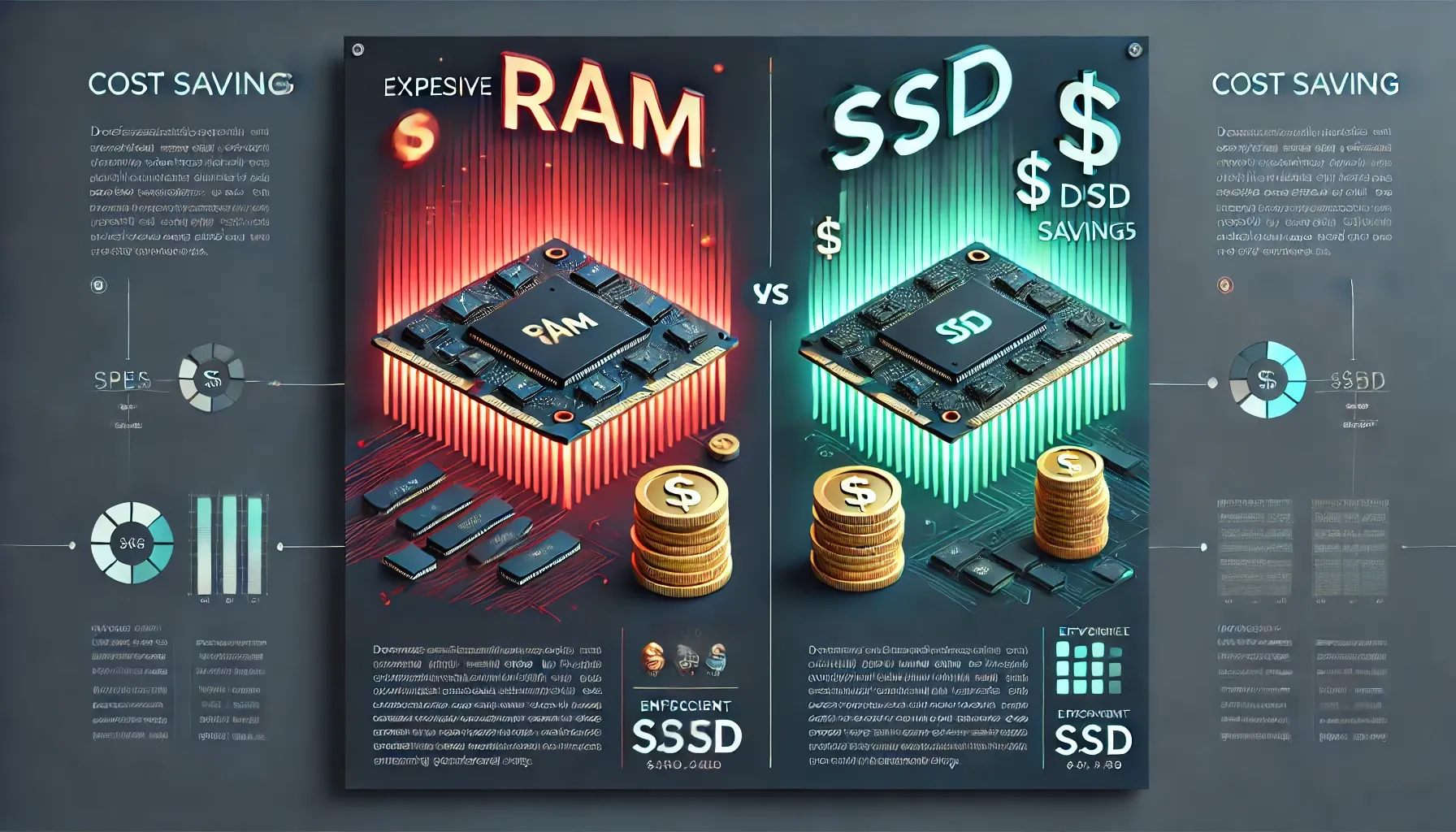

Financial Benefits for OpenAI (Cost Savings via Disk Offloading)

OpenAI’s Projects feature delivers substantial cost efficiencies by leveraging disk I/O instead of RAM for context storage. By offloading much of the memory burden onto more affordable disk resources, OpenAI can significantly cut infrastructure expenses while maintaining robust, scalable services. By focusing on cost reduction and infrastructure savings, this approach provides a sustainable model for long-term operational efficiency.

Cost Savings via Disk Offloading

RAM vs. Disk Storage Costs:

Enterprise-grade RAM can cost between $10-$20 per GB/month, whereas SSD storage ranges from $0.10-$0.25 per GB/month. This disparity in pricing translates into considerable savings when large-scale context data is stored on disk rather than in memory.

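Example Calculation (using the low end of each price range: $10 per GB of RAM and $0.10 per GB of SSD per month):

- Without Projects: 10 million users × 2 GB RAM each × $10/GB = $200 million/month

- With Projects: 10 million users × (1 GB RAM × $10/GB + 1 GB disk × $0.10/GB) = $101 million/month

- Monthly Savings: ~$99 million (~49.5% reduction)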

Refining for Average Usage Patterns:

Considering that only about 50% of users are active at any given time, the estimated monthly savings settle at around $49.5 million (half of the ~$99 million above). These are illustrative figures built on the pricing assumptions above, but they underscore the potential real-world impact of Projects.
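The arithmetic above can be reproduced with a short Python sketch. It uses the low end of each quoted price range ($10 per GB for RAM, $0.10 per GB for SSD) and the assumption that about half of users are active at once. The function and constant names are illustrative, and the output is only as good as those assumptions.

```python
# Assumed prices: the low end of each range quoted above (dollars per GB per month).
RAM_PRICE_PER_GB = 10.00
SSD_PRICE_PER_GB = 0.10
USERS = 10_000_000
ACTIVE_SHARE = 0.5  # assumption from the text: ~50% of users active at any given time


def monthly_cost(users: int, ram_gb: float, disk_gb: float) -> float:
    """Monthly storage cost in dollars for a per-user RAM and disk footprint."""
    return users * (ram_gb * RAM_PRICE_PER_GB + disk_gb * SSD_PRICE_PER_GB)


without_projects = monthly_cost(USERS, ram_gb=2, disk_gb=0)  # all 2 GB held in RAM
with_projects = monthly_cost(USERS, ram_gb=1, disk_gb=1)     # 1 GB offloaded to disk
savings = without_projects - with_projects
adjusted_savings = savings * ACTIVE_SHARE

print(f"Savings: ${savings:,.0f}/month ({savings / without_projects:.1%})")
# Savings: $99,000,000/month (49.5%)
print(f"At 50% activity: ${adjusted_savings:,.0f}/month")
# At 50% activity: $49,500,000/month
```

Swapping in the high end of each range ($20 and $0.25) is a quick way to see how sensitive the estimate is to pricing.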

Opportunity Costs and Token Savings

Beyond raw infrastructure savings, the Projects feature streamlines user workflows. By storing context persistently on disk, repetitive efforts—such as reloading files, re-explaining instructions, and regenerating context—are cut by roughly 50%. This efficiency boosts productivity and reduces unnecessary token consumption, directly benefiting both OpenAI and its users.

Scalability and Enterprise Adoption

These cost and productivity gains enable OpenAI to scale efficiently, accommodating more users without proportionally increasing hardware spending. As integrations with enterprise tools like Slack and GitHub become more seamless, the Projects feature emerges as a critical differentiator—particularly for large organizations seeking to maximize value. With half the token wastage, teams can focus on higher-level tasks and strategic initiatives rather than routine context management.

User-Centric Value

The financial benefits of Projects go beyond reduced overhead. Reinforcing user-centric innovation, these savings empower OpenAI to invest in improved features—such as real-time collaboration tools—while maintaining competitive pricing. The end result is a more inclusive, accessible platform that efficiently meets the diverse needs of its user base.

Workflow Optimization: Best Practices

OpenAI’s Projects feature streamlines complex, iterative workflows by leveraging persistent context storage and disk I/O, significantly reducing redundant tasks and token usage. By focusing on best practices, professionals—from technical writers to product teams—can ensure that each project iteration builds upon previous work, cutting overhead costs and enabling more efficient collaboration.

How to Use Projects Effectively

Token Usage Reduction:

In traditional workflows, token consumption can skyrocket due to repetitive uploads, re-explaining instructions, and maintaining context. For a busy professional, this can total more than 16 million tokens annually. With Projects, persistent context reduces token usage by approximately 50%. For example, a technical writer drafting a comprehensive manual no longer needs to repeatedly re-upload reference materials or re-establish context, allowing for sustained focus on new content creation.

Improve Collaboration:

Persistent storage ensures that multiple team members can seamlessly build on shared files without needing to reload or restate context. In a development environment, one contributor might update code snippets while another reviews historical changes. This synchronized approach eliminates versioning confusion and redundant work, ensuring that updates remain current and accessible to all collaborators.

Key Cost Factors

Without Projects Feature

- Repetition and Token Waste:

Continually re-establishing context inflates token usage. Over an estimated 250 workdays with roughly four conversations per day (about 1,000 conversations averaging roughly 16,250 tokens each), token consumption can reach about 16,250,000 tokens annually.

- Manual Effort and Time Costs:

Reorganizing files, duplicating efforts, and ensuring consistency across versions leads to lost productivity. Professionals spend valuable time on routine context management instead of strategic, value-added tasks.

With Projects Feature

- Persistent Context Storage:

By halving token usage, from ~16,250,000 tokens to ~8,125,000, persistent context storage reduces both costs and complexity. This streamlined approach allows users to pick up exactly where they left off, no matter how long it’s been since the last session.

- Improved Productivity:

Instead of repeatedly setting the stage, teams can dive directly into content creation, code iteration, or research analysis. The result is more efficient allocation of time, as well as reduced token-related expenses.

Token Usage and Cost Estimates

Current Workflow (No Projects):

- Annual Token Usage: ~16,250,000 tokens

- Costs (Input: $0.0015 per 1,000 tokens; Output: $0.002 per 1,000 tokens; assuming an even split between input and output): ~$28.44 total annually

With Projects Feature:

- Annual Token Usage: ~8,125,000 tokens (~50% reduction)

- Estimated Costs: ~$14.22 total annually

These figures, although modest for an individual user, scale significantly at the enterprise level and when factoring in the reclaimed time and reduced cognitive overhead.
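The totals above can be checked with a few lines of Python. The ~$28.44 figure corresponds to tokens split evenly between input and output, so this sketch makes that split an explicit, adjustable assumption. The function name is illustrative.

```python
# Per-token rates quoted above (dollars per 1,000 tokens, converted to per token).
INPUT_RATE = 0.0015 / 1000
OUTPUT_RATE = 0.002 / 1000


def annual_cost(total_tokens: int, input_share: float = 0.5) -> float:
    """Annual spend in dollars, assuming a fixed input/output split of the tokens."""
    input_tokens = total_tokens * input_share
    output_tokens = total_tokens - input_tokens
    return input_tokens * INPUT_RATE + output_tokens * OUTPUT_RATE


baseline_tokens = 16_250_000            # current workflow, no Projects
projects_tokens = baseline_tokens // 2  # the ~50% reduction described above

print(f"No Projects: ${annual_cost(baseline_tokens):.2f}")    # No Projects: $28.44
print(f"With Projects: ${annual_cost(projects_tokens):.2f}")  # With Projects: $14.22
```

Changing `input_share` shows the range. For the baseline, an all-input workload comes to about $24 and an all-output workload to $32.50.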

Best Practices for Leveraging OpenAI Tools

- Incremental Refinement and Collaboration:

- Upload foundational materials early, providing objectives, outlines, or drafts right from the start.

- Use iterative updates to incorporate new findings, highlight evolving priorities, and make context-aware revisions.

- Persistent context within Projects ensures no effort is lost across sessions.

- Markdown as the Default Format:

Markdown (.md) outputs are both the default and the most compatible with OpenAI’s environment. Keeping your files in Markdown ensures consistent formatting and simplifies downstream processes. Converting directly to PDF may pose formatting challenges, so it’s best to refine in Markdown and then export as needed.

- Average Token Usage per Conversation for Writers:

A single conversation might consume 10,000 to 35,000 tokens (average ~20,000 tokens). With ~4 conversations per day over ~250 workdays, token usage can total ~20,000,000 tokens annually. This is higher than the ~16,250,000-token baseline used in the cost estimates above, which assumes smaller conversations on average. Leveraging Projects to maintain context reduces this figure substantially, enabling users to focus on high-quality output rather than routine maintenance tasks.
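The per-writer estimate is simple multiplication, and a short Python sketch makes the assumptions easy to adjust. The constants come straight from the paragraph above. The function name is illustrative.

```python
CONVERSATIONS_PER_DAY = 4
WORKDAYS_PER_YEAR = 250
CONVERSATIONS_PER_YEAR = CONVERSATIONS_PER_DAY * WORKDAYS_PER_YEAR  # 1,000


def annual_tokens(tokens_per_conversation: int) -> int:
    """Annual token total for a given average conversation size."""
    return tokens_per_conversation * CONVERSATIONS_PER_YEAR


for label, per_conversation in [("low", 10_000), ("average", 20_000), ("high", 35_000)]:
    print(f"{label:>7}: {annual_tokens(per_conversation):,} tokens/year")

# Working backwards: the ~16,250,000-token baseline implies this average size.
implied_average = 16_250_000 // CONVERSATIONS_PER_YEAR
print(f"Implied average: {implied_average:,} tokens/conversation")
# Implied average: 16,250 tokens/conversation
```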

Practical Example: AI-Powered Collaboration in Action

OpenAI’s Projects feature has already proven invaluable for users across various industries. For instance, a publishing firm transitioned to OpenAI’s Projects to streamline its editorial workflow. The firm’s editors could now maintain historical context for book drafts without repetitive backtracking, resulting in a 40% reduction in editing time. Similarly, a software development team leveraged persistent storage to manage evolving codebases, ensuring seamless collaboration between developers across different time zones. For more details, see the Case Study.

Real-World Benefits of AI Collaboration

What Are the Real-World Benefits of Projects?

- Before Projects: A researcher managing iterative tasks consumed ~35,000 tokens/session.

- With Projects: Persistent context management reduces token use by 50%, freeing time for higher-value tasks.

Case Study:

- A novelist drafts a 300-page manuscript, maintaining style and tone without constant backtracking.

- A developer optimizes coding workflows by accessing historical project files seamlessly.

Frequently Asked Questions (FAQ)

What is OpenAI’s Projects feature?

Projects allows users to manage long-form workflows by associating conversations with files and storing context persistently using disk I/O.

How does Projects improve workflow optimization?

By enabling persistent storage, Projects eliminates redundant uploads and re-contextualization, allowing users to focus on higher-value tasks.

How could Projects save OpenAI an estimated $49 million monthly?

OpenAI reduces infrastructure costs by offloading context storage from RAM (expensive) to SSD storage (affordable), cutting monthly expenses nearly in half.

What are the benefits of disk I/O and persistent storage?

Disk I/O reduces memory usage while keeping context accessible, enabling scalable and cost-efficient workflows. Users previously consumed approximately 16,250,000 tokens annually in repetitive tasks. By leveraging persistent context, Projects reduces this usage by 50%, translating into significant cost savings. The decrease also reflects fewer redundant interactions, improving both efficiency and spending.

Why It’s a Win-Win

OpenAI’s Projects feature doesn’t just streamline workflows and enhance productivity—it creates mutual benefits for both users and the platform itself. By improving operational efficiency, retaining context, and cutting down on repetitive work, Projects ensures that creators, developers, and researchers can achieve their goals faster and more consistently. At the same time, OpenAI can reduce infrastructure costs, scale more affordably, and reinvest savings into even better features. The result is a stable, long-term foundation for AI-driven content creation and collaboration, making it a win-win scenario for everyone involved.

User Advantages

- Reduced Cognitive Load: Persistent context eliminates the need to constantly restate instructions or re-upload files, saving time and mental effort.

- Cost and Token Savings: By halving token usage and preventing unnecessary repetition, users spend less on tokens, freeing up resources for core creative and strategic tasks.

- Professional Scalability: Whether drafting lengthy manuscripts or managing complex codebases, professionals can easily scale up their projects without workflow bottlenecks.

OpenAI’s Gains

- Lower Operational Costs: By offloading context storage from RAM to disk, OpenAI saves significantly on infrastructure expenses, enabling it to deliver top-tier services at competitive prices.

- Resource Optimization: More efficient data management allows OpenAI to handle increased user demand without sacrificing performance, ensuring a seamless experience for all users.

- Reinvestment in Innovation: With lower overhead, OpenAI can focus on developing new features, improving model capabilities, and enhancing integrations, thus continually elevating user satisfaction.

In essence, Projects bridges the gap between complex, long-form tasks and efficient, user-friendly AI workflows. As professionals enjoy more fluid, consistent output at lower costs, OpenAI simultaneously reduces infrastructure spending and fosters an environment ripe for ongoing innovation. This synergy guarantees a stable, beneficial ecosystem that keeps growing more valuable with time—a genuine win-win for creators, collaborators, and the platform powering them.

Conclusion

OpenAI’s Projects feature is a transformative solution for anyone managing complex, long-form workflows. By seamlessly integrating persistent context storage, disk I/O efficiency, and strategic token usage reductions, Projects allows creators, developers, researchers, and businesses to streamline their processes and drastically improve overall productivity. Instead of spending valuable time and resources repeatedly re-establishing context, users can now maintain coherence across vast, evolving documents, codebases, and research datasets—all within a single, intuitive interface.

Discover workflow optimization with OpenAI’s Projects and take the next step towards more efficient, scalable solutions for your professional or enterprise needs.

Beyond these immediate workflow optimizations, the financial impact on both OpenAI and its user base is substantial. With 50% reductions in token usage, significant infrastructure savings, and more affordable scaling options, Projects introduces a new era of cost-effective AI collaboration. The ability to maintain context over extended periods not only enhances productivity and reliability but also makes advanced AI services more accessible to a broader audience. This creates a positive feedback loop: as OpenAI invests in more user-centric features, individuals and enterprises benefit from higher-quality outputs, competitive pricing, and smoother integrations with everyday tools.

From technical writers ensuring narrative consistency in long manuscripts to developers maintaining evolving code repositories, OpenAI’s Projects feature revolutionizes how we interact with AI-driven workflows. As organizations continue to seek out efficient, scalable, and cost-effective solutions, Projects stands out as a pioneering innovation—one that aligns with the growing demand for long-form content management, robust enterprise adoption, and sustainable AI-driven workflow optimization. The result is a powerful, future-proof framework that empowers users to create, innovate, and collaborate at unprecedented scale.

Glossary

- Disk I/O: The process by which data is read from and written to disk storage, enabling efficient management of large data sets while reducing reliance on live memory (RAM). For instance, a novelist drafting a 300-page manuscript can maintain a consistent narrative without reloading earlier sections. Similarly, a researcher handling multiple datasets benefits from seamless data retrieval, ensuring cohesive analysis across projects.

- Persistent Storage: A storage system that retains data across sessions, ensuring that historical context or project information remains accessible for long-term workflows.

- Token Usage: A measure of the input and output data processed during an AI session. It directly impacts the cost and efficiency of interactions with AI tools.

- Workflow Optimization: The process of improving efficiency and productivity in managing iterative and complex tasks, often through tools or strategies like OpenAI’s Projects. Moving to Projects may require adapting workflows to incorporate persistent storage effectively; OpenAI’s documentation and support resources can help users make a smooth transition from traditional RAM-intensive methods to disk I/O workflows.

- Scalability: The ability of a system to handle increased workloads or support more users without degrading performance or requiring significant additional resources.

- RAM (Random Access Memory): A type of volatile memory used for fast, temporary data storage during active processes. RAM is expensive compared to other storage options.

- SSD (Solid-State Drive): A type of non-volatile storage that uses integrated circuits to store data persistently. SSDs are slower than RAM but far cheaper per gigabyte, which makes them more cost-efficient for long-term data storage.

- Context Window: The limit on the amount of information an AI model can process at a time, measured in tokens. Exceeding this limit requires splitting tasks into smaller segments.

- Infrastructure Costs: Expenses associated with the hardware and software systems that power AI tools, including RAM, disk storage, and other operational requirements. These systems can evolve further as the Projects feature expands. Future iterations might include integrations with live editing tools or API extensions for real-time collaboration, enabling OpenAI to solidify its position as the premier platform for complex, scalable workflows.

References

- OpenAI Official Documentation

- OpenAI API Reference

https://platform.openai.com/docs/api-reference/introduction

- OpenAI Models and Pricing

https://openai.com/pricing


- GPT-4 Technical Report

OpenAI (2023). “GPT-4 Technical Report.”

https://openai.com/research/gpt-4

- Disk vs. RAM Cost Analysis

General market analysis for enterprise hardware and storage solutions, such as:

- IDC (International Data Corporation) Reports on Enterprise Storage Costs

- AWS EC2 and EBS Pricing Guides (for comparative cloud storage costs)

https://aws.amazon.com/ec2/pricing/

- Software Engineering Best Practices for Persistent Storage

- Fowler, M. (2002). Patterns of Enterprise Application Architecture. Addison-Wesley Professional.

- Evans, E. (2003). Domain-Driven Design: Tackling Complexity in the Heart of Software. Addison-Wesley Professional.

- Workflow Optimization and Productivity Studies

- Covey, S. R. (1989). The 7 Habits of Highly Effective People. Free Press.

- Nielsen, J. (1993). “Iterative User Interface Design.” IEEE Computer, 26(11):32–41.

Related Sources

- Dan Sasser’s Blog: An informational and instructive tech blog

Check in with Dan to keep up to date on the latest tech trends and to learn about a wide variety of topics.

- OpenAI Research: The Future of AI and Its Impact on Workflow Management

OpenAI’s official insights into the capabilities and advancements of their tools for content creation and professional workflows.

- Optimizing AI Workflows: The Role of Persistent Context and Data Management

An in-depth look at how persistent context and intelligent data management systems can improve workflow efficiency in AI-driven environments.

- Tokenization and Its Impact on AI Efficiency

Learn more about token management in AI models and how optimizing token usage can drastically improve cost and performance.

- The Power of Disk I/O and Cloud Storage for Scalable Applications

A guide to how disk I/O and cloud storage solutions can enhance scalability and efficiency, especially in high-demand environments.

- AI Workflow Automation: Best Practices and Real-World Applications

This source discusses the growing field of AI automation, offering case studies and examples of how AI tools like OpenAI are revolutionizing industries.

- Scalable and Cost-Effective Infrastructure for Enterprises

An article on how businesses can balance cost and scalability while optimizing workflows through cloud-based infrastructure and storage solutions.

- Improving Developer Efficiency with AI-Driven Tools

An exploration of how developers are leveraging AI tools to optimize coding workflows, streamline development cycles, and boost productivity.

Support My Work

If you enjoyed reading this article and want to support my work, consider buying me a coffee and sharing this article on social media using the social sharing links! Also check out my GitHub page at GitHub.com/dansasser